Prerequisites (click to view)

graph LR

ALG(["fas:fa-trophy Sorted Array fas:fa-trophy #160;"])

ASY_ANA(["fas:fa-check Asymptotic Analysis #160;"])

click ASY_ANA "./math-asymptotic-analysis"

ARRAY(["fas:fa-check Array #160;"])

click ARRAY "./list-data-struct-array"

ASY_ANA-->ALG

ARRAY-->ALG

Sorted arrays, that is arrays arranged in chronological, numerical, alphabetical, or some other order, yield more utility than randomly ordered arrays. For instance, search operations drop from $O(n)$ to $(\log_2{n}$). The trick here is using the binary search algorithm (outlined in pseudo code below) which only works with sorted data. This is likely one of the most ubiquitous algorithms in the world as it is useful for both computing as well as everyday tasks. In addition to more performant searching, each of the operations below are constant ($O(1)$) time1 with sorted arrays and at least linear time ($\Theta(n)$) with randomly ordered arrays.

- Min: Find the minimum value in the array

- Max: Find the maximum value in an array

- Predecessor: Find the item ordered directly before an arbitrary item. For

example, in the array

[9,12,10,4]the predecessor of10is9. - Successor: Find the item ordered directly after an arbitrary item. For

example, in the array

[9,12,10,4]the successor of10is12. - Rank: Find the ordered position of any specific item in the array. For

instance, in the array

[9,12,10,4]the rank of10is 3.

History

The afforded utility of pre-sorted data has long been known. In fact, sorting was a primary motivation for industry pioneers. The lineage of modern computing machines is directly traceable to the eponymous Hollerith tabulation machines used to sort data held on punch cards for the 1890 census. The first routine ever written for a stored-program computer (EDVAC) was a sorting routine.2

Sorting to enable searching isn’t sequestered to the computing field. Examples of the binary search algorithm date back as far as 200 BCE with the Inakibit-Anu tablet from Babylon3. The distinction of first published reference to binary search in a computing context goes to John Mauchly from his 1946 paper entitled Theory and Techniques for the Design of Electronic Digital Computers4.

Of course, sorting is not free; the cost must be amortized across all uses of the data structure. Sorting algorithms are one of the most scrutinized areas of computer science and they are the topic of another section. For the purposes at hand, accept that the cost of sorting is $O(n \log_2{n})$5. As an example, suppose you needed to find the minimum value in an array. This is an $\Theta(n)$ operation on an unsorted array which is lower than the $O(n \log_2{n})$ cost of sorting. Conversely, consider a scenario where you must identify the predecessor of each array item. The time cost without sorting is $\Theta(n^2)$, that is one predecessor operation for every item in the array. Pre sorting results in a single $O(n \log_2{n})$ sort operation and $n$ constant time predecessor operations which yields a total of $O(n \log_2{n} + n)$. Sorting is a superior approach in this instance.

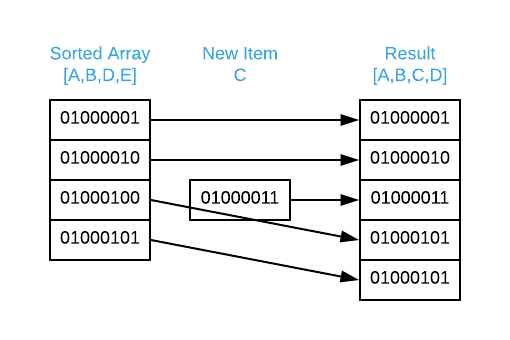

Another cogent concern is insertions. The array page outlined the inherit problem of adding items to a contiguous section of memory. Insert and delete are $O(n)$ operations for both sorted and randomly ordered arrays; however, the hidden constants are much higher in the sorted case. Maintaining sort order compounds the complications because the algorithm must determine the placement of the item and shift existing items to accommodate it. This is illustrated in the image below.

Key Takeaways

- The divergence of utility between sorted and randomly ordered arrays is sufficient to consider them to be different data structures

-

Asymptotic complexity comparison:

Operation Sorted Randomly Ordered Search $O(\log_2{n})$ $O(n)$ Min $O(1)$ $\Theta(n)$ Max $O(1)$ $\Theta(n)$ Predecessor $O(1)$ $\Theta(n)$ Successor $O(1)$ $\Theta(n)$ Rank $O(1)$ $\Theta(n)$ - The insert and delete operations are more complex on sorted arrays than they are on randomly ordered arrays

- Array sorting time must be amortized across all data structure operations

Pseudo Code

Operations that are identical to randomly sorted arrays are ommited.

\begin{algorithm}

\caption{Sorted Array Operations}

\begin{algorithmic}

\REQUIRE A = array sorted sequentially

\REQUIRE value = value to search for

\OUTPUT value if it exists, otherwise $\text{NOT_FOUND}$

\FUNCTION{binarySearch}{A, value}

\IF{$\vert A \vert \leq 0$}

\RETURN $\text{NOT_FOUND}$

\ENDIF

\STATE half $\gets \lfloor\frac{\vert A \vert}{2}\rfloor$

\IF{$A_{half} = $ value}

\RETURN $A_{half}$

\ENDIF

\IF{$A_{half} \lt $ value}

\RETURN \CALL{binarySearch}{$A_{half + 1}...A_{\vert A \vert - 1}$, value}

\ENDIF

\RETURN \CALL{binarySearch}{$A_0...A_{half-1}$, value}

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\REQUIRE item = item to insert into the array

\OUTPUT $A \cup$ item with sort order maintained

\FUNCTION{insert}{A, item}

\STATE $A^{\prime} \gets$ memory allocation of size $\vert A \vert + 1$

\FOR{$i \gets 0$ to $\vert A \vert - 1$}

\STATE \COMMENT{0 and max size cases are omitted for the sake of brevity}

\IF{item $\gt A_{i-1}$ and item $\lt A_i$}

\STATE copy item to $A^{\prime}$

\ENDIF

\STATE copy $A_i$ to $A^{\prime}$

\ENDFOR

\RETURN $A^{\prime}$

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\OUTPUT $\min{A}$

\FUNCTION{min}{A}

\RETURN $A_0$

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\OUTPUT $\max{A}$

\FUNCTION{max}{A}

\RETURN $A_{\vert A \vert - 1}$

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\REQUIRE item = reference item

\OUTPUT item immediately preceding item

\FUNCTION{predecessor}{A, item}

\STATE $s$ = item size

\STATE $a$ = address of item

\RETURN item located at $a-s$

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\REQUIRE item = reference item

\OUTPUT item immediately following item

\FUNCTION{successor}{A, item}

\STATE $s$ = item size

\STATE $a$ = address of item

\RETURN item located at $a+s$

\ENDFUNCTION

\STATE

\REQUIRE A = array sorted sequentially

\REQUIRE item = reference item

\OUTPUT index of item

\FUNCTION{rank}{A, item}

\STATE $a$ = base address of A

\STATE $a_i$ = address of item

\STATE $s$ = item size

\RETURN $\frac{a_i - a}{s}$

\ENDFUNCTION

\end{algorithmic}

\end{algorithm}

Implementation Details

The pseudo code indicates the use of comparison operators ($=, \lt, \gt, \text{etc…}$) to determine the relative positioning of array items. This makes for easily consumable examples but is not a recommended real-world practice. Hard-coded comparisons prevent reuse. Suppose you had one array sorted in ascending order and the other in descending. Using this approach would force you to recreate your array algorithms for each sort order. There would be even more duplication for different data types.

By convention, the solution is to supply a comparator function pointer as an argument. A comparator accepts two items and returns 0 if they are equal, a positive number if the second items should be sorted after the first, and a negative number if the second item should come before the first. Using this method, the algorithms are generic enough to work for any sort order and any data type. The code below is an simplistic example of the technique6.

#include <stdio.h> typedef int (*comparator)(const void* x, const void* y); int LargestIntFirst(const void* x, const void* y) { return *(int*)y - *(int*)x; } int SmallestIntFirst(const void* x, const void* y) { return *(int*)x - *(int*)y; } int LargestCharFirst(const void* x, const void* y) { return *(char*)y - *(char*)x; } int SmallestCharFirst(const void* x, const void* y) { return *(char*)x - *(char*)y; } void PrintInOrder(void* x, void* y, comparator comparator) { int comp_result = comparator(&x, &y); if (comp_result <= 0) printf("y-x\n"); else printf("x-y\n"); } int main() { int x = 5; int y = 10; PrintInOrder(&x, &y, LargestIntFirst); PrintInOrder(&x, &y, SmallestIntFirst); char char_x = 'a'; char char_y = 'b'; PrintInOrder(&char_x, &char_y, LargestCharFirst); PrintInOrder(&char_x, &char_y, SmallestCharFirst); }

Asymptotic Complexity

- Search: $O(\log_{2}n)$

- Min: $O(1)$

- Max: $O(1)$

- Predecessor: $O(1)$

- Successor: $O(1)$

- Rank: $O(1)$

Advantages

- Array Advantages: All of the advantages of standard arrays

- Search: Optimized for quick search operations

- Utility: Many useful constant time operations (min, max, predecessor, successor, and rank)

Disadvantages

- Array Disadvantages: All of the disadvantages of standard arrays

- Insert: Insert operations are more complex because they must maintain sort order.

- Sort Time: The fastest an array can be sorted is $O(n \log_{2}n)$. This time must be calculated into any algorithm hoping to capitalize on the added utility.

Source Code

Relevant Files:

Click here for build and run instructions

Exercises

-

What is the difference between the direct addressing formula for a sorted array and a randomly ordered array.

Answers (click to expand)

Absolutely nothing...

-

Identify a common, non-software related task where the binary search algorithm is naturally employed.

Answers (click to expand)

There are many correct answers. One example is looking up a word in a printed dictionary. Suppose you are trying to find the word rakish. You open up the dictionary to a page that has words starting with the letter s which prompts you to open an earlier page. Suppose this time you land on a page of p words. The next step is to try a page in between the two you have already tried. The process plays out as such until you find the coveted word.

-

Using the supplied binary search pseudo code, indicate the number of equality operations (line 6) required to locate each value listed below when \(A = \{3,6,9,12,15,18,21,28,32\}\).

a. 18

b. 3

c. 28

d. 2Answers (click to expand)

- 4 total equality operations: 15, 28, 21, and finally 18

- 4 total equality operations: 15, 9, 6, and finally 3

- 2 total equality operations: 15 and 28

- 4 total equality operations: 15, 9, 6, 3, and then return NOT_FOUND

-

If you need to find the maximum and minimum values in an array, is pre-sorting advantageous?

Answers (click to expand)

The combined cost of the min and max operations is $\Theta(2n)$ or simply $\Theta(n)$. Pre-sorting incurs the cost of a sort operation and two constant operations for a total of $O(n \log_2{n} + 2)$ or just $O(n \log_2{n})$. It's most likely not worth the cost of presorting. It may be worth experimentation because the constants hidden by asymptotic analysis will come into play.

For a quick reality check, considered in a vacuum, the two options are close enough that it's not worth an optimization either way. Choose whichever makes your code less complex. -

Assuming sorting isn’t an option, define the pseudo code for the following operations.

a. Find the minimum value in a randomly ordered array.

b. Find the successor of an arbitrary item in a randomly ordered array.

c. Find the rank of an arbitrary item in a randomly ordered arrayFor formatting purposes, the answers for question 5 are located here.

-

Assuming you know the address of the item in question and therefore do not have to search the array. ↩

-

The Art of Computer Programming: Volume 3, Sorting and Searching, Second Edition, p. 385 ↩

-

Ancient Babylonian Algorithms by Donald E. Knuth. Available online at https://dl.acm.org/doi/10.1145/361454.361514 ↩

-

Available from Amazon at: https://www.amazon.com/Moore-School-Lectures-Techniques-Electronic/dp/0262031094 ↩

-

As the sorting algorithms section will make clear, there are exceptions to this. Regardless, this is generally true. ↩

-

Null guards omitted for brevity. ↩